We ❤️ Open Source

A community education resource

6 limitations of AI code assistants and why developers should be cautious

Consider these drawbacks of AI coding tools before using them.

People outside the profession have long hoped to democratize coding. Software development has always demanded considerable experience and skill, yet artificial intelligence (AI) tools are often presented as making it something anyone can do. For working developers, the promise is a smoother, more efficient workflow with fewer common mistakes in our code. It’s exciting to think about the potential these tools hold!

What does AI truly signify for us as developers? How can we harness its capabilities while remaining aware of any drawbacks? Let’s take a moment to examine how these tools perform (and sometimes fall short) in practice.

Is AI really helpful or is it taking the joy out of programming?

It may seem as though we have entered a golden age of AI in which software development stays on “easy mode” for good. But have we? In practice, these technologies are still far from ideal.

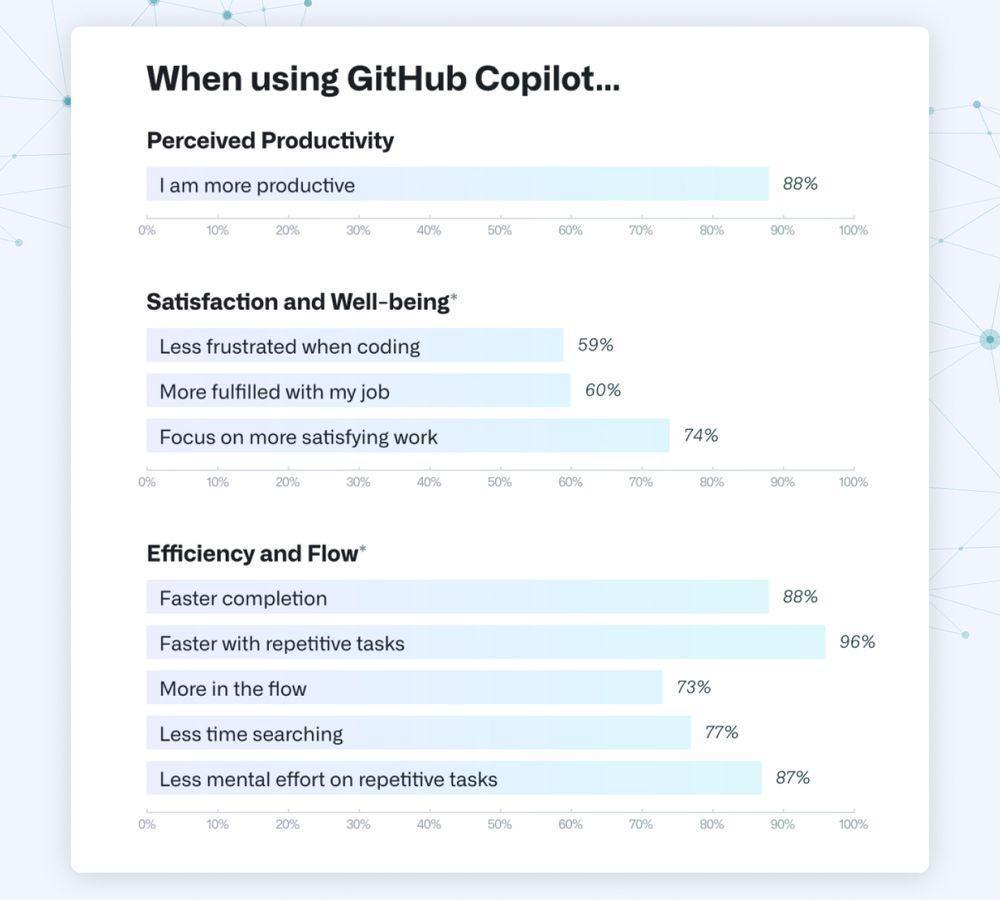

Approximately 60–75% of GitHub Copilot users report that Copilot makes their work more engaging by relieving coding anxiety and giving them more time for enjoyable tasks. In addition, a Harvard Business School study [PDF] found productivity gains of 17-43% for developers using AI.

Tools like Copilot also integrate with popular editors such as Visual Studio Code and IntelliJ IDEA, so developers can use these features without changing their workflows. Still, there are difficulties along the way, and the real effect depends on how developers apply the tools.

Let’s explore the real benefits and limitations of AI coding assistants to give developers a clear picture of what to expect.

The allure of AI coding assistants

AI coding assistants are designed to automate routine programming tasks such as suggesting code, completing it, and generating entire code blocks. These tools are powered by machine learning models trained on massive amounts of public code, which lets them predict what a developer is likely to need next.

The chart below shows how the generative AI code assistant market is growing.

According to researchers from Microsoft, MIT, and Princeton, developers who used Copilot sped up their workflow by 26%. Similarly, a McKinsey paper observed that code generation, refactoring, and documentation tasks were completed 20-50% faster with AI.

Developers are now expected to go beyond mere coding; they must grasp the intricacies of system architecture and ensure long-term code quality. Refactoring is central to that goal. It often involves comparing the before and after states of a codebase and can be quite complex, but it is essential for maintaining and improving code quality. It also demands care, especially in large or legacy systems where the code may be complicated, entangled, and loaded with technical debt.

AI can suggest ways to improve code structure and eliminate dead code. Here are some key refactoring steps:

- Remove dead code: Focus on eliminating unused or redundant code to streamline the application.

- Best practices: Use AI suggestions to apply known patterns and split the code into components, with attention to modularity, separation of concerns, code organization, maintainability, and scalability.
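To make the "remove dead code" step concrete, here is a minimal sketch of how unused functions might be flagged automatically. It uses Python's standard `ast` module; the helper name `find_unused_functions` and the `SAMPLE` snippet are illustrative assumptions, and the check is deliberately naive (it only looks at top-level functions in a single file and cannot see dynamic calls), so treat its output as a list of candidates for human review rather than a verdict.

```python
import ast


def find_unused_functions(source: str) -> list[str]:
    """Return names of top-level functions never referenced in `source`.

    Hypothetical helper for illustration: single-file, name-based, and
    blind to dynamic dispatch such as getattr().
    """
    tree = ast.parse(source)
    defined = {
        node.name
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }
    # Any bare name or attribute seen anywhere in the file counts as a use.
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Name):
            used.add(node.id)
        elif isinstance(node, ast.Attribute):
            used.add(node.attr)
    return sorted(defined - used)


# Made-up sample code with one function that nothing calls.
SAMPLE = '''
def active():
    return helper() + 1

def helper():
    return 41

def legacy_report():
    return "never called"

print(active())
'''

print(find_unused_functions(SAMPLE))  # ['legacy_report']
```

A candidate list like this is a starting point: someone who knows the system still has to confirm that a flagged function is not reached through a plugin, a framework hook, or another module.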

AI code generators can potentially change the game for development workflows, but they also raise ethical issues about accountability, bias, intellectual property, and the future of work. Let’s explore the ethical facets of using AI code generators, the challenges they pose, and how they impact developers, companies, and society at large.

The figure below outlines the ethics of AI code generation, the new role AI assistants can play, and the challenges we may face.

Let’s take a look at some case studies where AI has helped in large legacy code refactoring:

Financial services companies like Capital One and Goldman Sachs have applied AI-based refactoring to their core systems. Capital One faced the challenge of migrating its core banking systems to a microservice architecture, so it employed AI tools to identify dependencies, risks, and areas for improvement. The tools assisted with the automated refactoring of code modules into smaller, more manageable microservices. The result was improved system agility, reduced time to market, and enhanced scalability.

Similarly, Goldman Sachs faced the challenge of modernizing its risk management systems. It turned to AI to analyze vast amounts of financial data and used the results to refactor risk models, improve accuracy, and automate compliance. The outcome was enhanced risk management, reduced operational costs, and improved regulatory compliance.

Additionally, ERP vendors like SAP have started using AI to analyze customer usage and identify areas for improvement in software functionality. Refactoring the codebase helped them enhance the user experience, improve performance, and increase product innovation.

Nevertheless, although the promise of these tools is huge, their practical value may not be as great as it is typically portrayed.

AI coding assistants are still in their early stages

It’s easy to see why AI assistants are appealing. According to Gartner, organizations adopting AI-based tools report up to a 30% reduction in repetitive work. Tools such as Copilot help speed up the coding process, reduce coding errors, and free developers for creative problem-solving tasks. But are these benefits as transformative as they seem?

Let’s explore some playful, yet valid reasons why developers might not always feel the magic.

1. Context over code

Every project a developer works on has its own business logic, business rules, and business objectives. AI assistants, though strong on syntax and semantics, are weak on this kind of contextual understanding. For example:

- On Reddit, a developer shared an amusing Copilot suggestion that turned a simple sorting function into an unnecessarily complex code block. The response? Nice try, AI, but let me handle the logic.

This limitation can leave developers spending more time fine-tuning AI-generated code than they would have spent writing it from scratch.
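As a rough illustration of the sorting anecdote, the sketch below contrasts an over-engineered sort with the one-line idiomatic version. Both functions are hypothetical and written for this article; they are not the actual suggestion from the Reddit post, which is not reproduced here.

```python
def sort_scores_overengineered(scores):
    """Hypothetical 'assistant-style' suggestion: a hand-rolled bubble sort."""
    items = list(scores)
    n = len(items)
    for i in range(n):
        # After each pass, the largest remaining value settles at the end.
        for j in range(n - i - 1):
            if items[j] > items[j + 1]:
                items[j], items[j + 1] = items[j + 1], items[j]
    return items


def sort_scores(scores):
    """What most developers would write: rely on the built-in sort."""
    return sorted(scores)


scores = [42, 7, 19, 7, 100]
print(sort_scores_overengineered(scores) == sort_scores(scores))  # True
```

Both versions return the same result, but the second is shorter, faster on large inputs, and easier to review, which is the kind of judgment the developer still has to supply.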

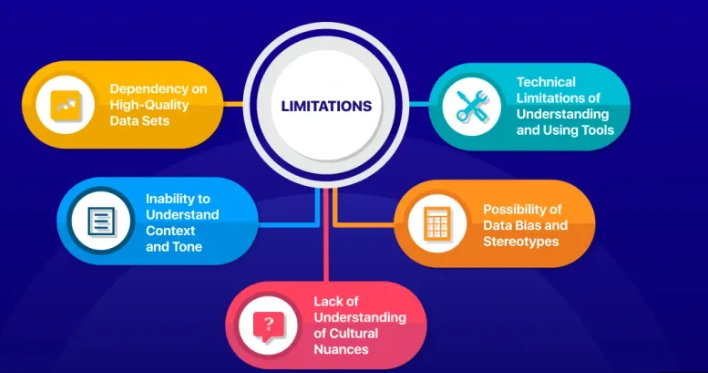

2. The training data dilemma

AI coding assistants rely on public codebases for training. That gives them breadth, but it does not guarantee quality or reliability. Common pitfalls include:

- Suggestions based on outdated libraries.

- Recommendations that violate best practices or security protocols.

- Duplicate code that may inadvertently infringe on open source licenses.

Developers need to double-check every suggestion, which can negate the intended time savings.

3. Creativity isn’t easily automated

Coding is more than just syntax; it’s problem-solving, design, and creativity. AI assistants can’t think critically or innovate. Let’s take a look at a Twitter discussion:

“AI coding tools are like karaoke machines. They can sing the song, but they don’t write the lyrics.”

For tasks that need originality, such as designing complex algorithms or creating scalable architectures, developers are irreplaceable. AI can suggest boilerplate code, but it cannot ideate or strategize.

4. Team collaboration and learning

AI tools often focus on individual productivity, but coding is inherently collaborative. In teams, discussions around code contribute to shared understanding and skill-building. AI-generated code can sometimes:

- Create inconsistencies in coding style.

- Lead to confusion if team members don’t understand or trust the AI-generated logic.

A Pew Research study highlights the importance of peer learning in tech teams, emphasizing that over 60% of developers learn on the job by reviewing or discussing code. AI assistants, while helpful, cannot replicate this human interaction.

5. Dependency risk

Depending too much on AI tools may inadvertently erode a developer’s skills. Consider this example from Hacker News:

“I used Copilot for a week. Now I can’t write a basic loop without second-guessing myself!”

The risk of over-dependence is real, especially for junior developers who may not yet have the confidence to challenge AI suggestions. Experienced developers, however, might find the tools redundant.

6. Ethics and accountability

First, AI algorithms cannot be held accountable for the errors they produce, nor do they offer transparency into their inner workings. As a result, they may generate code that reinforces harmful stereotypes or promotes misinformation.

AI assistants are tools; they do not make decisions or reach conclusions on an organization’s behalf. Responsibility for code quality, security, and compliance always rests with developers. The most obvious problem in AI-assisted coding is compromised security: generated code may ignore secure coding practices, pull in unsafe dependencies, or mishandle sensitive data.
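One common instance of non-secure generated code is a SQL query built by string concatenation. The sketch below, which uses Python's standard `sqlite3` module with an in-memory database, shows why reviewers should reject that pattern in favor of a parameterized query. The table, the function names, and the malicious input are all assumptions made up for the example.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with two sample users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Pattern to reject in review: user input concatenated into the SQL text.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the driver treats `name` strictly as data.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
malicious = "x' OR '1'='1"
print(len(find_user_unsafe(conn, malicious)))  # 2: every row leaks
print(len(find_user_safe(conn, malicious)))    # 0: no such user
```

Whether a given assistant produces the unsafe form depends on the prompt and the model; the point is only that the developer, not the tool, is the one who has to catch it.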

AI tools depend heavily on open source code to produce their output, which can introduce insecure or out-of-date libraries whose vulnerabilities have not been reviewed. The AI’s knowledge is also limited to its training data, so it may generate code that has since been proven insecure because that finding is not yet reflected in the data.

LLM-enabled coding assistants with access to an organization’s internal data are on the rise as organizations look to leverage AI to expedite development. Such an assistant can unintentionally leak sensitive data, such as API keys, credentials, or proprietary algorithms, particularly if it sends your data to the AI provider’s servers for processing.
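One way a team might reduce this risk is to scrub obvious secrets from a snippet before it leaves the developer's machine. The following is a minimal sketch under stated assumptions: the `redact` helper and its pattern are hypothetical, they only catch quoted values assigned to variables whose names contain words like `api_key` or `password`, and real secret formats vary widely, so this is not a substitute for a dedicated secret scanner.

```python
import re

# Hypothetical pattern: a variable whose name contains a sensitive word,
# assigned a quoted string value.
ASSIGNMENT = re.compile(
    r"""
    (\w*(?:api_key|token|password|secret)\w*)  # variable name
    (\s*=\s*)                                  # assignment operator
    (["'])(.*?)\3                              # quoted value
    """,
    re.IGNORECASE | re.VERBOSE,
)


def redact(text: str) -> str:
    """Replace quoted values of sensitive-looking assignments with a marker."""
    return ASSIGNMENT.sub(r"\1\2\3<REDACTED>\3", text)


snippet = 'API_KEY = "sk-12345"\nretries = 3\ndb_password = \'hunter2\''
print(redact(snippet))
```

Running the sketch leaves `retries = 3` untouched and replaces the two quoted secrets with `<REDACTED>`; anything the pattern does not recognize, such as a key embedded in a URL, would still pass through.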

Getting the most out of AI tools

AI can be used to improve various aspects of software development. AI can analyze user behavior and feedback to provide insights into how software applications can be improved, leading to a more user-centric design and functionality.

AI can analyze user data to provide personalized experiences, such as content recommendations, user interface adjustments, and more. AI tools can be used to predict project timelines, allocate resources efficiently, and even prioritize tasks based on urgency and importance.

AI assistants are broadly useful for developers of all levels, but the benefits differ. Junior developers learning the basics gain fast code snippets and explanations, fixes for syntax errors, and a readily available tutor. Senior engineers usually benefit more from automating repetitive tasks, getting optimization suggestions based on analysis of their code, and quickly finding relevant information in a large codebase, all of which require a deeper understanding of the system’s architecture and context.

To realize the potential of AI coding assistants, developers must use them wisely.

- Guide the AI: Development is more productive when developers actively edit and steer the AI’s output rather than accepting it as is.

- Establish clear objectives: Teams should set specific goals for how they intend to use AI tools, e.g., to speed up documentation or to extend test coverage.

- Combine artificial intelligence with human oversight: Automated testing, regular code reviews, and senior developer monitoring can help ensure that AI-generated code is of high quality.

- Experiment and share results: Organizations should promote A/B testing to see where AI technologies excel and share best practices across teams.
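The "human oversight" point above can be sketched as a small test suite that any AI-suggested helper must pass before it is merged. The `chunk` function stands in for a hypothetical assistant suggestion, and the test cases are assumptions about what a reviewer might want to pin down; the example uses only Python's standard `unittest` module.

```python
import unittest


def chunk(items, size):
    """Hypothetical AI-suggested helper: split `items` into lists of at most `size`."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


class ChunkTests(unittest.TestCase):
    """Checks a reviewer might require before accepting the suggestion."""

    def test_even_split(self):
        self.assertEqual(chunk([1, 2, 3, 4], 2), [[1, 2], [3, 4]])

    def test_remainder_kept(self):
        self.assertEqual(chunk([1, 2, 3], 2), [[1, 2], [3]])

    def test_empty_input(self):
        self.assertEqual(chunk([], 3), [])

    def test_rejects_non_positive_size(self):
        with self.assertRaises(ValueError):
            chunk([1], 0)


# Run the suite programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ChunkTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Edge cases such as the empty list and the zero size are exactly where generated code tends to go unexamined, so writing them down first gives the code review something concrete to check.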

Conclusion

Coding assistants are timely, valuable tools that can help engineers be more productive and creative. However, they are not a replacement for human expertise. Developers should regard them not as black boxes but as tools that help complete tasks, improve code quality, and introduce new ideas.

As AI advances further, we should expect even smarter coding assistants. These tools are set to improve in contextual awareness, collaborative writing, and code quality. How these advances develop, and how intelligently we use them, will steer the future of software development.

The opinions expressed on this website are those of each author, not of the author's employer or All Things Open/We Love Open Source.